Amazon DynamoDB Pre-Warming: Why It Matters

It’s a dark, cold night in Ireland, and you are wrapped up warm in bed when a violent eruption of buzzing on wood adjacent to your head awakens you. Yes, it’s the work mobile phone; it's somewhere in the early hours when even the earliest of risers would find the current clock striking abhorrent.

You wearily answer the call, and the voice on the other end of the line is that of a sprightly fellow somewhere on the other side of the globe, tucking into his afternoon hot beverage, telling you there is an issue in production and operations cannot fix it - they need you.

The rush of adrenaline gives you the fight-or-flight response you need to instantaneously invert your position from horizontal to vertical.

You are up, stumbling in the dark for the light switch to illuminate your way to the computer like a Precision Approach Path Indicator guiding a plane in to land.

Once at your desk, you begin logging in. Not yet fully alert, you only manage to enter the string of random letters and numbers correctly on your third attempt.

Your password is highly secure, but right now you wish it were the kind of easy-to-remember phrase a late digital adopter might choose, rather than something designed to be uncrackable.

But, you are in. The video conference link is beaming at you from the unread instant message chat, calling you to click and join the team in this endeavor. With a deep breath, you hover the mouse to highlight the URL, you click, and enter what will become a lesson in Amazon DynamoDB that will stick with you long after the issue is resolved.

So, what happened on that frightful night oh so many years ago?

I was working as an architect on a project that had just gone into production, using DynamoDB for the first time in the organization’s history. It used a single table to hold key-value items, each with several attributes, including a free-text field.

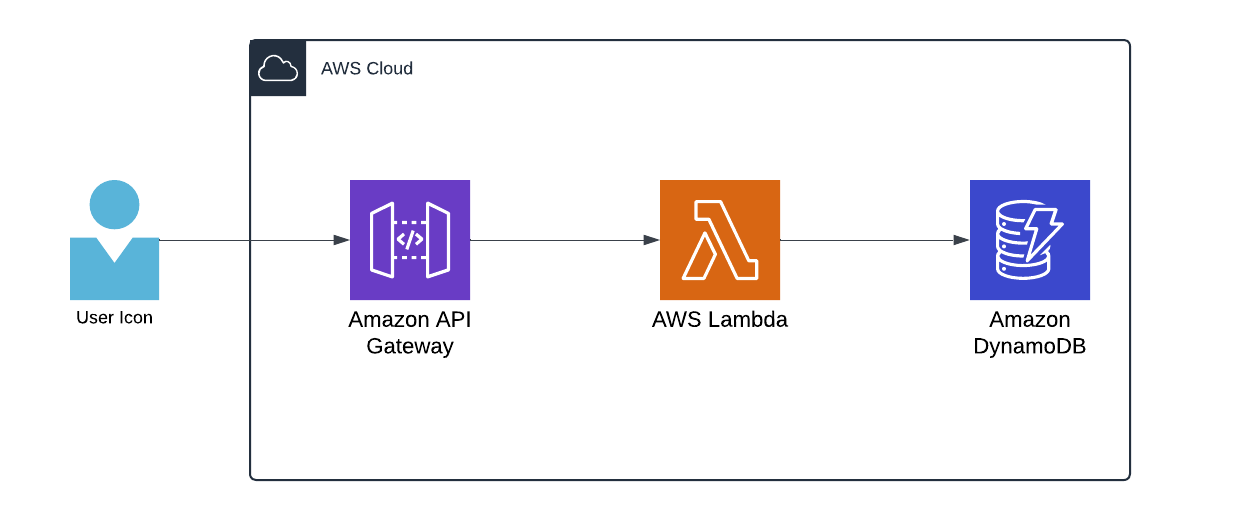

The table was populated through API calls to Amazon API Gateway with an AWS Lambda proxy integration. This was our first attempt at a serverless, event-driven architecture, and it appeared to tick all the boxes of the AWS Well-Architected Framework.

Development, testing, and integration in the lower environments had gone smoothly.

Amazon DynamoDB had lived up to its promises, and the business value had been unlocked. Now, however, the table’s Amazon CloudWatch alarms were firing, throttling exceptions were being returned to users, and data was not being written to the table. Did we really have that many users?

The table was in provisioned capacity mode. We had provisioned 2x the capacity we needed as a fail-safe, and only a fraction of users had been onboarded to the new product. There were only around ten requests per second. We had designed for this, and we should have been able to handle this load easily.

Back to Basics

To fully understand what was happening in this case, we have to go back to basics.

DynamoDB capacity is measured in Read Capacity Units (RCUs) and Write Capacity Units (WCUs). In DynamoDB, a read request can be strongly consistent, eventually consistent, or transactional.

A strongly consistent read of an item up to 4 KB requires one read unit. An eventually consistent read of an item up to 4 KB requires one-half of a read unit. A transactional read of an item up to 4 KB requires two read units. For larger items, the size is rounded up to the next 4 KB multiple.
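The following minimal Python sketch shows how the read rounding works. It assumes that 1 KB is 1,024 bytes and that item sizes round up to the next 4 KB boundary. The function name `read_units` is hypothetical and is not part of any AWS SDK.

```python
import math

# Read units consumed per 4 KB chunk, by read type (figures from the text above).
RCU_PER_4KB = {
    "eventual": 0.5,
    "strong": 1.0,
    "transactional": 2.0,
}


def read_units(item_size_bytes: int, read_type: str = "strong") -> float:
    """RCUs consumed by reading one item of the given size.

    The item size is rounded up to the next 4 KB (4,096-byte) boundary.
    """
    if item_size_bytes <= 0:
        raise ValueError("item size must be positive")
    chunks = math.ceil(item_size_bytes / 4096)
    return chunks * RCU_PER_4KB[read_type]
```

A 250 KB item spans 63 chunks of 4 KB. A strongly consistent read of it therefore costs 63 RCUs, and a transactional read costs 126 RCUs.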

A write unit represents one write of an item up to 1 KB in size. If you write an item larger than 1 KB, DynamoDB consumes additional write units, rounding the size up to the next 1 KB.
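Writes follow the same pattern with a 1 KB step. Here is a minimal sketch, again assuming that 1 KB is 1,024 bytes. The helper name `write_units` is hypothetical.

```python
import math


def write_units(item_size_bytes: int) -> int:
    """WCUs consumed by writing one item of the given size.

    One WCU covers up to 1 KB (1,024 bytes); larger items round up
    to the next whole KB.
    """
    if item_size_bytes <= 0:
        raise ValueError("item size must be positive")
    return math.ceil(item_size_bytes / 1024)
```

A single 250 KB item therefore consumes 250 WCUs, which is the figure the rest of this story hinges on.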

So far, so good.

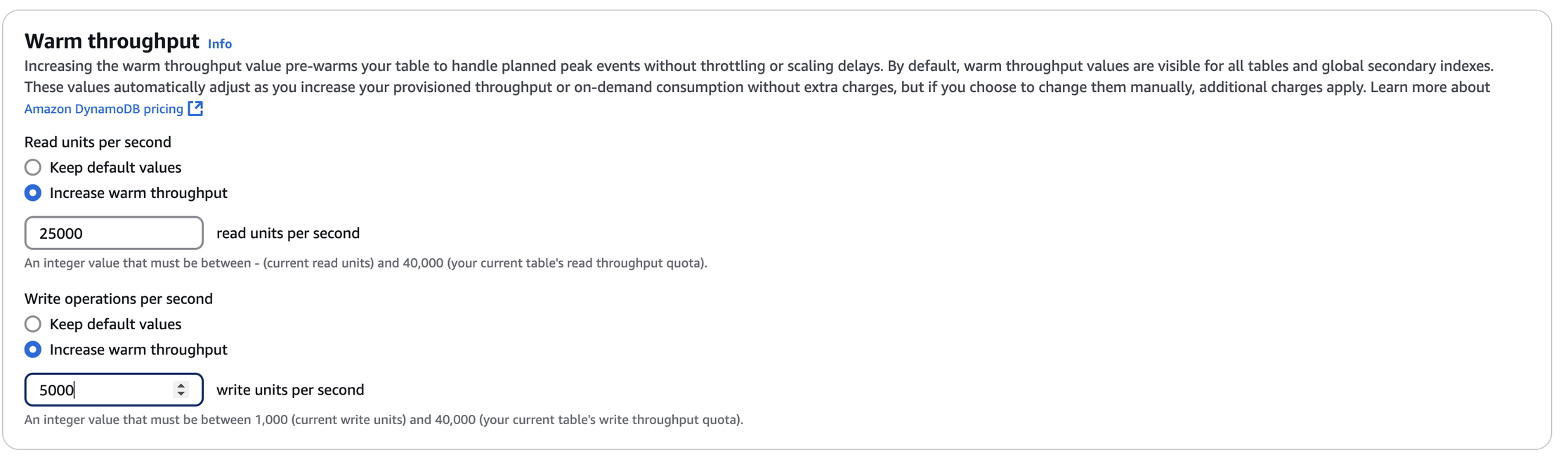

We knew that our item had a free-text attribute where a user could enter anything they liked, but we had limited the total size of an item to 250 KB - well under the DynamoDB maximum item size of 400 KB. Our table was provisioned with 5000 WCUs and 25000 RCUs. According to the logs, we were serving at most 10 write requests per second, with few or no reads.

In the worst case, with every item at our 250 KB limit, our write capacity unit consumption was:

250 WCUs per write x 10 writes per second = 2500 WCUs
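The following short Python check reproduces the same worst-case arithmetic. It uses only the figures from the text: a 5000 WCU table, a 250 KB item cap, and 10 writes per second. The variable and function names are illustrative.

```python
import math

TABLE_WCU = 5000              # provisioned write capacity from the text
MAX_ITEM_BYTES = 250 * 1024   # enforced item-size cap from the text
WRITES_PER_SECOND = 10        # peak write rate observed in the logs


def write_units(item_size_bytes: int) -> int:
    # One WCU per 1 KB, rounded up.
    return math.ceil(item_size_bytes / 1024)


def table_utilization(writes_per_second: int, item_bytes: int, provisioned_wcu: int):
    """Return (WCUs consumed per second, fraction of table capacity used)."""
    consumed = writes_per_second * write_units(item_bytes)
    return consumed, consumed / provisioned_wcu


consumed, share = table_utilization(WRITES_PER_SECOND, MAX_ITEM_BYTES, TABLE_WCU)
print(f"{consumed} WCUs consumed, {share:.0%} of provisioned capacity")
# prints "2500 WCUs consumed, 50% of provisioned capacity"
```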

We were operating at half our table capacity, and we had a partition key with extremely high cardinality. In fact, it was a GUID. So, why were we getting throttled when trying to write to the table?

Time to call AWS support.

What we learned on the call was that DynamoDB also enforces RCU and WCU limits at the partition level.

So, what is a DynamoDB Partition?

Amazon DynamoDB stores data in partitions. A partition is an allocation of storage for a table, backed by solid-state drives (SSDs) and automatically replicated across multiple Availability Zones within an AWS Region. Partition management is handled entirely by DynamoDB—you never have to manage partitions yourself.

- AWS Documentation

A single partition holds up to around 10 GB of data. DynamoDB passes each item’s partition key through an internal hash function, and the result determines which partition stores the item.
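The following sketch illustrates hash-based placement. It is a simplified model, not DynamoDB’s real algorithm: SHA-256 modulo N stands in for DynamoDB’s internal hash function and partition layout, which are not documented. The function name `partition_for` is hypothetical. The point it shows is that even perfectly random GUID keys do not split evenly in any given second. Some partitions receive several writes while others receive none.

```python
import hashlib
import uuid
from collections import Counter


def partition_for(partition_key: str, num_partitions: int) -> int:
    """Map a partition key to a partition index.

    Illustrative stand-in only: DynamoDB's real hash function and
    partition layout are internal and not documented.
    """
    digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
    # Treat the first 8 bytes of the digest as an unsigned integer.
    return int.from_bytes(digest[:8], "big") % num_partitions


# One second of peak traffic: ten writes with random GUID keys, nine partitions.
keys = [str(uuid.uuid4()) for _ in range(10)]
placement = Counter(partition_for(key, 9) for key in keys)
print(placement)  # partition index -> number of writes it received
```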

What are the RCU and WCU limits at the partition level?

Each partition can serve at most 3000 RCUs and 1000 WCUs.

What did this mean for us?

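When we created the table in provisioned mode, DynamoDB had effectively created 9 partitions to meet our table-level capacity. Serving 25000 RCUs at 3000 RCUs per partition needs 9 partitions, which is more than enough for 5000 WCUs at 1000 WCUs per partition. Despite our high-cardinality key, several write requests could land on the same partition within the same second, and that partition had a maximum capacity of only 1000 WCUs.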
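The partition count above can be reproduced as a lower bound. The smallest number of partitions whose combined per-partition ceilings cover the table’s provisioned throughput gives 9 for our table. This is a sketch only: DynamoDB decides the real partition count internally and may create more. The function name `min_partitions` is hypothetical.

```python
import math

# Per-partition throughput ceilings quoted above.
PARTITION_MAX_RCU = 3000
PARTITION_MAX_WCU = 1000


def min_partitions(rcu: int, wcu: int) -> int:
    """Smallest partition count whose limits cover the provisioned RCUs and WCUs.

    A lower bound only: the actual count is chosen by DynamoDB.
    """
    return max(math.ceil(rcu / PARTITION_MAX_RCU),
               math.ceil(wcu / PARTITION_MAX_WCU))


print(min_partitions(25000, 5000))  # prints 9
```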

Our throttling was caused by a handful of large items close to the 250 KB limit we had enforced. At roughly 250 WCUs per item, 4 such writes to the same partition in one second would use the partition’s entire 1000 WCUs (4 x 250 WCUs = 1000 WCUs). Any more than that caused a throttling event, because we had exceeded the partition’s write capacity.

Ultimately, the table would scale out at the partition level to meet our needs. That takes time, though, and in the meantime we would continue to be throttled.
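To get a feel for how often that collision happens, the following Monte Carlo sketch estimates the share of peak seconds in which some partition is asked for more than 1000 WCUs. It rests on stated assumptions, not on our production data:

- every write is a maximum-size item;
- keys land on partitions uniformly at random;
- burst and adaptive capacity are ignored.

The function name and defaults are hypothetical.

```python
import random


def throttle_probability(writes_per_second=10, partitions=9,
                         wcu_per_write=250, partition_max_wcu=1000,
                         trials=20000, seed=42):
    """Estimate the chance that, in a given second, at least one partition
    receives more write capacity demand than its ceiling.

    Assumptions: all writes are maximum-size items, keys hash uniformly
    at random, and burst/adaptive capacity are ignored.
    """
    rng = random.Random(seed)  # seeded so the estimate is reproducible
    hits = 0
    for _ in range(trials):
        load = [0] * partitions
        for _ in range(writes_per_second):
            load[rng.randrange(partitions)] += wcu_per_write
        if max(load) > partition_max_wcu:
            hits += 1
    return hits / trials


print(f"{throttle_probability():.1%} of peak seconds exceed a partition's WCU limit")
```

Under these assumptions, the estimate comes out at roughly 2 to 3 percent of peak seconds. That is small per second, but it adds up to throttled requests many times an hour, even though the table as a whole sits at half its capacity.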

In this case, the solution to our late-night conundrum was to temporarily over-provision the table’s capacity units and wait for the update to complete. This would split the table into more partitions. Once the additional capacity was in place, we could lower the capacity back to our original values without losing the new partitions, because DynamoDB does not merge partitions back together.

The extra partitions would remain even after the table’s capacity was lowered. Our writes would therefore be spread across more partitions, distributing the load more evenly across the table. From then on, we would be billed only for the lower provisioned capacity.
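The manual fix can be sketched with the AWS SDK for Python (boto3). This is a minimal sketch, not a record of the exact commands run that night. `pre_split_table` is a hypothetical helper, and the table name and capacity values in the usage comment are placeholders. The sketch calls `update_table` with `ProvisionedThroughput`, then uses the `table_exists` waiter, which polls until the table status is `ACTIVE` again. The client is passed in, so the function can be exercised without AWS credentials.

```python
def pre_split_table(client, table_name, peak_rcu, peak_wcu, target_rcu, target_wcu):
    """Temporarily raise provisioned capacity so DynamoDB splits the table
    into more partitions, then lower it again once the table is ACTIVE.

    `client` is expected to be a boto3 DynamoDB client.
    """
    # The table_exists waiter polls DescribeTable until TableStatus is ACTIVE.
    waiter = client.get_waiter("table_exists")

    # Step 1: over-provision to force DynamoDB to add partitions.
    client.update_table(
        TableName=table_name,
        ProvisionedThroughput={"ReadCapacityUnits": peak_rcu,
                               "WriteCapacityUnits": peak_wcu},
    )
    waiter.wait(TableName=table_name)

    # Step 2: drop back to normal capacity; the new partitions are kept.
    client.update_table(
        TableName=table_name,
        ProvisionedThroughput={"ReadCapacityUnits": target_rcu,
                               "WriteCapacityUnits": target_wcu},
    )
    waiter.wait(TableName=table_name)


# Hypothetical usage (requires AWS credentials and an existing table):
#   import boto3
#   pre_split_table(boto3.client("dynamodb"), "my-table",
#                   peak_rcu=50000, peak_wcu=20000,
#                   target_rcu=25000, target_wcu=5000)
```

Bear in mind that DynamoDB limits how many times per day you can decrease a table’s provisioned capacity, so this is not something to run repeatedly.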

This was a manual intervention on our part, made after the event. This is exactly where DynamoDB pre-warming now comes in.

What is pre-warming Amazon DynamoDB tables with warm throughput?

Warm throughput is a DynamoDB feature released in late 2024. It tells you how many read and write operations your DynamoDB tables can support immediately. You can set it when you create a table or modify it at any time, and the change is applied asynchronously. Using this feature, you can scale out your DynamoDB table ahead of time, creating more underlying partitions to handle your expected traffic volumes.
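With boto3, requesting warm throughput might look like the following minimal sketch. It relies on our understanding of the API and should be checked against the API reference for your SDK version. The assumptions are that `update_table` accepts a `WarmThroughput` parameter with `ReadUnitsPerSecond` and `WriteUnitsPerSecond`, and that `describe_table` reports the result under `Table.WarmThroughput.Status`. This requires a boto3 release recent enough to include the feature. `set_warm_throughput` is a hypothetical helper, and the values in the usage comment are placeholders.

```python
import time


def set_warm_throughput(client, table_name, read_units_per_second,
                        write_units_per_second, poll_seconds=20,
                        timeout_seconds=3600):
    """Request warm throughput for a table, then poll until DynamoDB
    reports the warm throughput status as ACTIVE.

    `client` is expected to be a boto3 DynamoDB client that supports
    the WarmThroughput parameter (an assumption about your SDK version).
    """
    client.update_table(
        TableName=table_name,
        WarmThroughput={
            "ReadUnitsPerSecond": read_units_per_second,
            "WriteUnitsPerSecond": write_units_per_second,
        },
    )
    deadline = time.monotonic() + timeout_seconds
    while True:
        table = client.describe_table(TableName=table_name)["Table"]
        status = table.get("WarmThroughput", {}).get("Status")
        if status == "ACTIVE":
            return table["WarmThroughput"]
        if time.monotonic() > deadline:
            raise TimeoutError(f"warm throughput for {table_name} is still {status}")
        time.sleep(poll_seconds)


# Hypothetical usage (requires AWS credentials and an existing table):
#   import boto3
#   set_warm_throughput(boto3.client("dynamodb"), "my-table",
#                       read_units_per_second=12000,
#                       write_units_per_second=4000)
```

Unlike the over-provision-and-lower approach, this requests the target throughput directly. The table’s billing mode and provisioned capacity are left as they are.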

Warm throughput effectively packages the solution we implemented on that fateful night. Back then, we raised our read and write capacity units far beyond what the table needed to force DynamoDB to partition and scale. Now you can use the warm throughput feature to do this directly… and hopefully, you’ll get more sleep than I did.